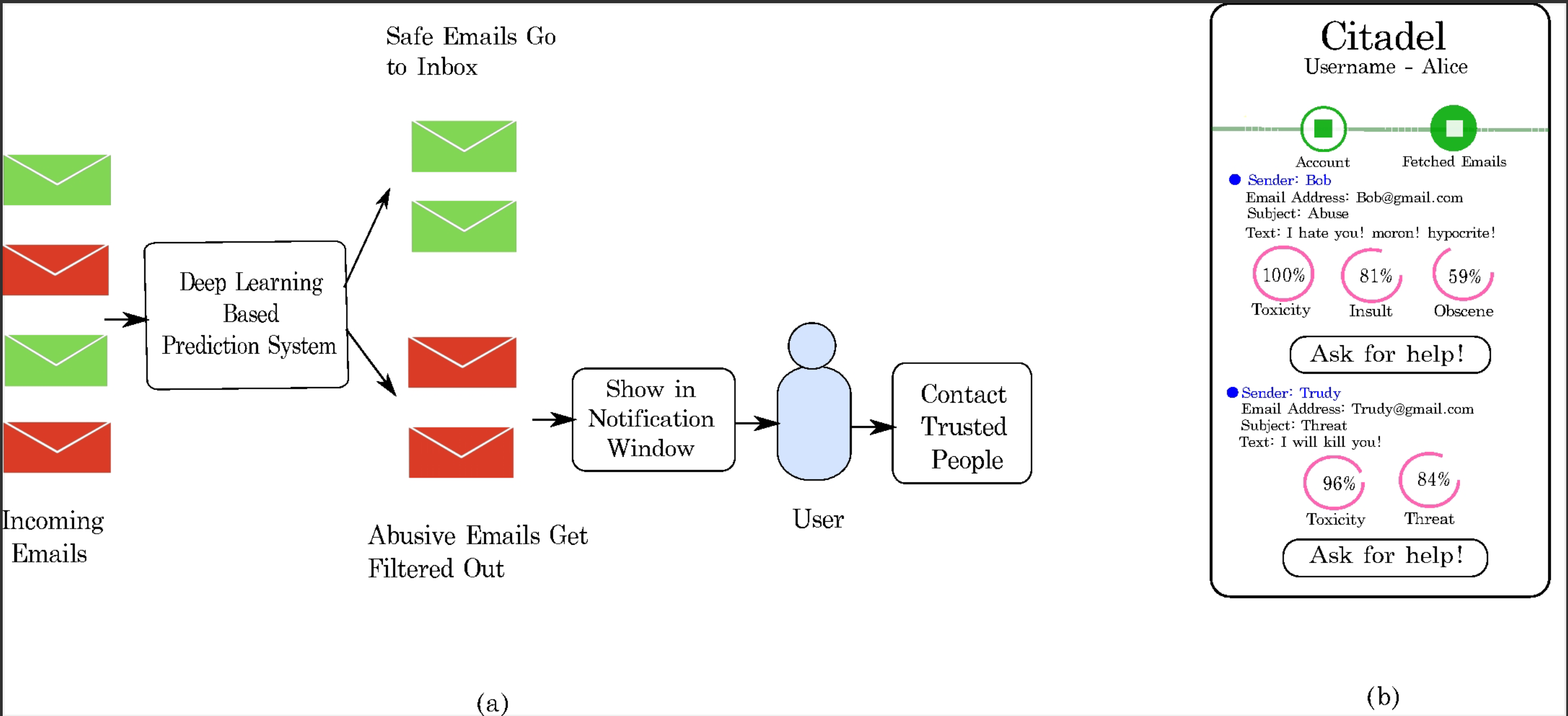

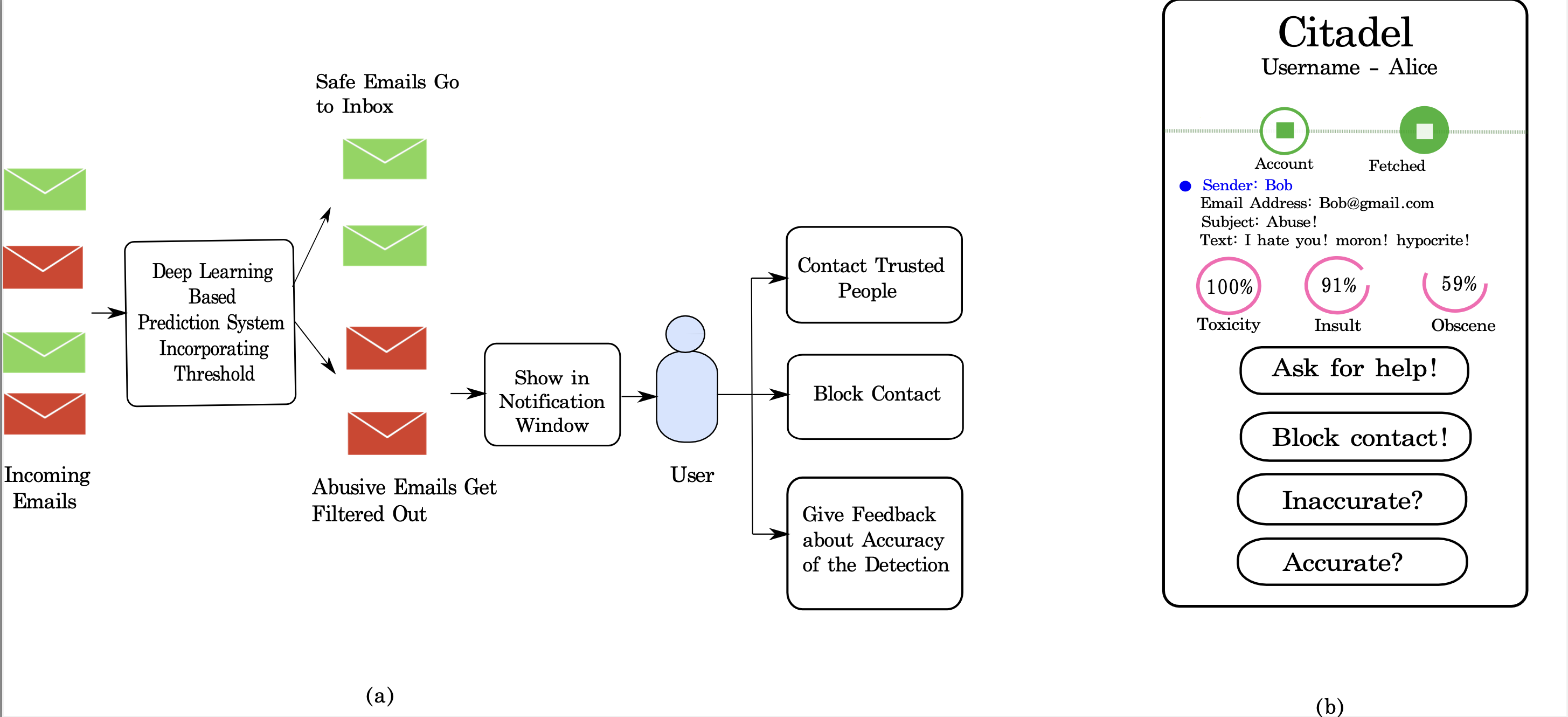

Backend: Engine

A deep neural network (CNN + LSTM + dense layers) detects abusive content. A secure database stores user profile and trusted-contact metadata, never the emails themselves.
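This is a minimal sketch of that data-minimization rule, assuming a relational store. The table and column names (`users`, `trusted_contacts`, `gmail_address`) are hypothetical. SQLite in memory stands in for the real database. The sketch shows only that the schema has no place to put email subjects or bodies. It does not cover encryption or access control, which a secure deployment would also need.

```python
import sqlite3

# Hypothetical schema: only profile and trusted-contact metadata are stored;
# there is deliberately no column for email subjects or bodies.
SCHEMA = """
CREATE TABLE IF NOT EXISTS users (
    user_id       INTEGER PRIMARY KEY,
    gmail_address TEXT NOT NULL UNIQUE
);
CREATE TABLE IF NOT EXISTS trusted_contacts (
    contact_id INTEGER PRIMARY KEY,
    user_id    INTEGER NOT NULL REFERENCES users(user_id),
    name       TEXT NOT NULL,
    email      TEXT NOT NULL
);
"""


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open the metadata store, enforce foreign keys, and create tables if needed."""
    conn = sqlite3.connect(path)
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(SCHEMA)
    return conn


def add_user(conn: sqlite3.Connection, gmail_address: str) -> int:
    """Register a user by address only and return the new user_id."""
    cur = conn.execute("INSERT INTO users (gmail_address) VALUES (?)", (gmail_address,))
    conn.commit()
    return cur.lastrowid


def add_trusted_contact(conn: sqlite3.Connection, user_id: int, name: str, email: str) -> int:
    """Attach a trusted contact to an existing user; unknown user_ids are rejected."""
    cur = conn.execute(
        "INSERT INTO trusted_contacts (user_id, name, email) VALUES (?, ?, ?)",
        (user_id, name, email),
    )
    conn.commit()
    return cur.lastrowid


def trusted_contacts(conn: sqlite3.Connection, user_id: int) -> list[tuple[str, str]]:
    """Return (name, email) pairs for a user's trusted contacts, oldest first."""
    rows = conn.execute(
        "SELECT name, email FROM trusted_contacts WHERE user_id = ? ORDER BY contact_id",
        (user_id,),
    )
    return rows.fetchall()


# Usage: one user with one trusted contact.
conn = open_store()
uid = add_user(conn, "alice@example.com")
add_trusted_contact(conn, uid, "Bob", "bob@example.com")
print(trusted_contacts(conn, uid))  # [('Bob', 'bob@example.com')]
```

The foreign key makes the store reject contacts for users who do not exist. The absence of any message column means the classifier's inputs can be processed and discarded without being persisted.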

Data: Training

Because of privacy constraints around storing emails, the model was trained on the Kaggle Jigsaw Toxic Comment Classification dataset (159,571 comments; labels: toxic, severe toxic, obscene, threat, insult, identity hate).
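Each comment in this dataset can carry several labels at once, so the training target is a multi-label vector rather than a single class. The sketch below reads rows into (text, label vector) pairs. It assumes the column layout of the competition's `train.csv` (`id`, `comment_text`, then one 0/1 column per label). The two sample rows are made up for illustration.

```python
import csv
import io

# Label columns as assumed in the Jigsaw train.csv: one 0/1 flag per label.
LABELS = ["toxic", "severe_toxic", "obscene", "threat", "insult", "identity_hate"]


def load_examples(fileobj):
    """Yield (text, label_vector) pairs; a comment may have several labels set."""
    for row in csv.DictReader(fileobj):
        yield row["comment_text"], [int(row[name]) for name in LABELS]


def label_counts(examples):
    """Count how many comments carry each label (a quick check of class imbalance)."""
    counts = dict.fromkeys(LABELS, 0)
    for _, vector in examples:
        for name, flag in zip(LABELS, vector):
            counts[name] += flag
    return counts


# Usage with two invented rows in the assumed format.
sample = io.StringIO(
    "id,comment_text,toxic,severe_toxic,obscene,threat,insult,identity_hate\n"
    'a1,"Thanks for the edit.",0,0,0,0,0,0\n'
    'b2,"You are an idiot.",1,0,0,0,1,0\n'
)
examples = list(load_examples(sample))
print(label_counts(examples))
# {'toxic': 1, 'severe_toxic': 0, 'obscene': 0, 'threat': 0, 'insult': 1, 'identity_hate': 0}
```

Keeping the labels as a vector rather than collapsing them to one class lets a single network with six sigmoid outputs learn all categories jointly.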

Model: Architecture

Inception-inspired convolutional branch + LSTM for long-range dependencies; character- and word-level inputs handle context and misspellings. Test accuracy: ~98.4% on a held-out 9,571-item split.
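The benefit of combining character- and word-level inputs can be sketched with a toy encoder. This sketch assumes whitespace tokenization, tiny hypothetical vocabularies, and fixed padding sizes (`max_words`, `max_chars`). None of these come from the project. A misspelled word falls back to the unknown id at the word level, but most of its character ids still match the correct spelling. That overlap is the signal a character-level convolutional branch can pick up. The CNN and LSTM layers that would consume these arrays are not shown.

```python
PAD, UNK = 0, 1  # reserved ids: 0 pads sequences, 1 marks out-of-vocabulary items


def build_vocab(tokens):
    """Map each distinct token to an id starting at 2 (0 and 1 are reserved)."""
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab) + 2
    return vocab


def encode(text, word_vocab, char_vocab, max_words=8, max_chars=10):
    """Return (word_ids, char_ids): one id per word, plus a padded row of char ids per word."""
    words = text.lower().split()[:max_words]
    word_ids = [word_vocab.get(w, UNK) for w in words]
    char_ids = [[char_vocab.get(c, UNK) for c in w[:max_chars]] for w in words]
    # Pad both views to fixed shapes so examples can be batched together.
    word_ids += [PAD] * (max_words - len(word_ids))
    char_ids = [row + [PAD] * (max_chars - len(row)) for row in char_ids]
    char_ids += [[PAD] * max_chars for _ in range(max_words - len(char_ids))]
    return word_ids, char_ids


# Usage: a misspelling loses its word id but keeps most of its character ids.
word_vocab = build_vocab("you are an idiot".split())
char_vocab = build_vocab("abcdefghijklmnopqrstuvwxyz0123456789")
clean_words, clean_chars = encode("You are an idiot", word_vocab, char_vocab)
typo_words, typo_chars = encode("you are an idi0t", word_vocab, char_vocab)
print(clean_words)  # [2, 3, 4, 5, 0, 0, 0, 0]
print(typo_words)   # [2, 3, 4, 1, 0, 0, 0, 0]
```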

Frontend: Chrome Extension

Works within Gmail (with user permission). Notifications show the severity of a flagged message and offer “Ask for help!”, “Block Contact”, and per-sender threshold feedback controls.
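How severity and sender-threshold feedback interact is not specified here. One plausible design is sketched below, assuming the model emits an abuse probability between 0 and 1. The severity cutoffs (0.9, 0.7), the default threshold (0.5), the step size (0.05), and all names are hypothetical. The logic is written in Python for consistency with the other sketches. A Chrome extension would implement it in JavaScript, and no Chrome APIs are shown.

```python
from dataclasses import dataclass, field


def severity(score: float) -> str:
    """Map an abuse probability to a hypothetical severity label."""
    if score >= 0.9:
        return "high"
    if score >= 0.7:
        return "medium"
    return "low"


@dataclass
class SenderThresholds:
    """Per-sender notification thresholds, nudged by user feedback (assumed design)."""
    default: float = 0.5
    step: float = 0.05
    per_sender: dict = field(default_factory=dict)

    def threshold(self, sender: str) -> float:
        return self.per_sender.get(sender, self.default)

    def notification(self, sender: str, score: float):
        """Return a severity label to show, or None if the score is below this sender's threshold."""
        if score < self.threshold(sender):
            return None
        return severity(score)

    def feedback(self, sender: str, too_sensitive: bool) -> None:
        """Raise the sender's threshold after a false alarm; lower it after a missed message."""
        delta = self.step if too_sensitive else -self.step
        new = min(0.99, max(0.01, self.threshold(sender) + delta))
        self.per_sender[sender] = round(new, 2)  # round to avoid float drift


# Usage: after one "too sensitive" report, borderline mail from that sender is no longer flagged.
prefs = SenderThresholds()
print(prefs.notification("a@example.com", 0.52))  # low
prefs.feedback("a@example.com", too_sensitive=True)
print(prefs.notification("a@example.com", 0.52))  # None
```

Keeping thresholds per sender lets feedback about one contact leave warnings about everyone else unchanged.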